It makes swapping GPUs marginally easier and might allow slightly tighter internal case dimensions, but I agree that the apparent downsides significantly outweigh the perks.

I’m going to copy two comments from TPU that I think are the most accurate regarding this proposal:

> This doesn’t solve anything at all. You still have to plug power into the motherboard, likely via the problematic 12V-2x6 connector once they extend it beyond 250W. Now you also need to dedicate additional (expensive) PCB layers and throw a lot more (expensive) copper traces at it too. It’s just a glorified extension cable that adds another connector to the equation. Why go from the PSU directly to the GPU when you can now do it with extra steps!! (/s) Cable still required, but now you need a new motherboard, too… Why? Because cables are evil, apparently, and this fits the dumb BTF form factor that’s all about form over function, for people with infinite wallets.

> This is a stupid idea. It should stay separate, as it always has. It also makes things worse if connector-gate happens again, since now you fry a much larger PCB instead of a tiny GPU board. And high power means you need to worry about heat on the mobo now too. It makes repair harder as well. But I’m not surprised. Asus has always sucked at user-friendliness. Worst customer support. Apparently that’s the trend now: keeping up with the “You’ll own nothing and be happy” motto and the stream-everything, throw-away-everything, always-in-debt mentality.

Right?

How about someone makes much higher-quality power cables that are more flexible and configurable? I’ll happily pay more.

Yeah, it doesn’t make sense.

I could understand the rationale for wanting a high-power PCIe specification if there were multiple PCIe devices that could benefit from extra juice, but it’s literally just the graphics card.

One might make the argument, “Oh, but what if you had multiple GPUs? Then it makes sense!” Except it doesn’t, because the additional power would only be enough for ONE high-performance GPU. For multiple GPUs you’d need even more motherboard power sockets…

It’s complexity for no reason, or purely for aesthetics. The GPU is the device that needs the power, so give the GPU the power directly, as we already are.

> I could understand the rationale for wanting a high-power PCIe specification if there were multiple PCIe devices that could benefit from extra juice, but it’s literally just the graphics card.

There was a point in the past when it was common to run multiple GPUs. Today, that’s not something you’d normally do unless you’re doing some kind of parallel compute project, because games don’t support it.

But it might be the case, if stuff like generative AI is in major demand, that sticking more parallel compute cards in systems might become a thing.

Did you read the rest of my comment, then?

To paraphrase: multiple graphics cards would mean multiple extra power sockets on the motherboard, because one isn’t enough, so it doesn’t solve much.

I was around in the CrossFire era and I’m here for the AI one, so I can totally see the use case for a convenient solution for multiple GPUs. I just don’t think this is it.

> But it might be the case, if stuff like generative AI is in major demand, that sticking more parallel compute cards in systems might become a thing.

Then you could be looking at multiple kilowatts being supplied by the motherboard. It would need large busbars if they stuck with 12V.
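To put rough numbers on the busbar point, here is a minimal sketch of I = P / V. The 600 W per card figure (the rating of the 12V-2x6 connector) and the 48 V comparison rail are illustrative assumptions, not anything stated in the proposal.

```python
# Hypothetical figures: the per-card wattage and the 48 V comparison rail are
# illustrative assumptions, not part of any announced specification.
PER_CARD_WATTS = 600.0  # assumed budget per high-end GPU


def rail_current_amps(power_watts: float, rail_volts: float) -> float:
    """Current a conductor must carry for a given load: I = P / V."""
    return power_watts / rail_volts


def board_current_for_cards(num_cards: int, rail_volts: float = 12.0,
                            per_card_watts: float = PER_CARD_WATTS) -> float:
    """Total current through the motherboard if every card is fed via its slot."""
    return rail_current_amps(num_cards * per_card_watts, rail_volts)


if __name__ == "__main__":
    for cards in (1, 2, 4):
        amps_12v = board_current_for_cards(cards)
        amps_48v = board_current_for_cards(cards, rail_volts=48.0)
        print(f"{cards} card(s): {cards * PER_CARD_WATTS:.0f} W -> "
              f"{amps_12v:.0f} A at 12 V, {amps_48v:.1f} A at 48 V")
```

Four cards at an assumed 600 W each works out to 200 A through the board at 12 V, versus 50 A at a hypothetical 48 V. Since resistive heating in copper scales with I²R, that 4× current reduction would cut conduction losses in the same conductor by 16×.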

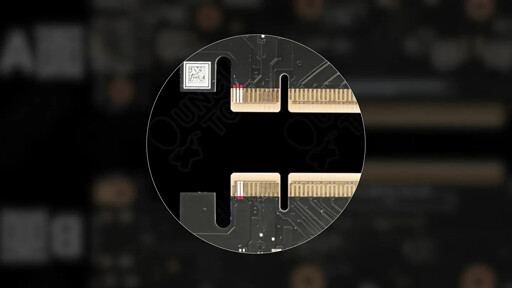

Interesting that it maintains compatibility both ways. Other companies have done stuff like this in the past: I know Apple GPUs with ADC (Apple Display Connector) had an extra pin further down from the main connector. But that extra foot could get in the way of components.